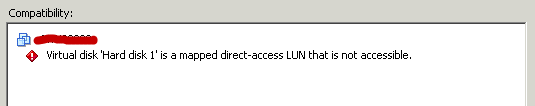

When you try to migrate a guest that uses one or more RDM disks, you might see this message.

The most probable reason is that the LUN IDs are different on the source and the destination ESX server.

One solution is:

- stop the guest

- write down the Physical LUN ID

- remove the RDM disk(s)

- vMotion the guest

- add the RDM disk(s) to the guest based on the Physical LUN ID

- start the guest
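The "write down the Physical LUN ID" step can itself be scripted. Below is a minimal sketch. It assumes a PowerCLI session that is already connected with Connect-VIServer. The function name Get-RdmInfo is hypothetical and is not part of PowerCLI.

```powershell
# Hypothetical helper: list the RDM disks of a VM with the identifiers needed
# to re-attach them later (requires a connected PowerCLI session)
function Get-RdmInfo {
  param([Parameter(Mandatory = $true)][string]$VMName)

  Get-VM -Name $VMName |
    Get-HardDisk -DiskType RawPhysical, RawVirtual |
    Select-Object Parent, Name, DiskType, ScsiCanonicalName, DeviceName,
      @{N = 'Controller'; E = {$_.ExtensionData.ControllerKey}},
      @{N = 'Unit'; E = {$_.ExtensionData.UnitNumber}}
}
```

For example, `Get-RdmInfo -VMName "MyVM"` (a placeholder name) should return one line per RDM. Each line includes the canonical name and device name that identify the LUN, plus the controller key and unit number.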

But why do this the hard (manual) way when we have PowerCLI?

Automating the above scenario doesn’t look too difficult, but there are some pitfalls along the way.

Gathering the information

In this step I collect extensive information about the guest and the RDM disk(s) it has connected.

As a safety measure, the collected information is dumped to a CSV file. Should the script fail, this makes it fairly easy to restore the RDM mappings.

The collected information also includes the controller to which the RDM disk is connected and the RDM’s unit number. This is important because otherwise the guest OS might mix up the drive letters.
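For a restore, the CSV can be read back with Import-Csv. Every field then comes back as a string, so this minimal sketch casts the numeric columns before they are passed on. The function name ConvertFrom-RdmReport is hypothetical. The sketch assumes the CSV was written with -UseCulture, as the script does, so it reads the file back with -UseCulture as well.

```powershell
# Hypothetical helper: turn the rows of the RDM report CSV back into typed objects.
# Import-Csv returns every field as a string, so the numeric columns are cast.
function ConvertFrom-RdmReport {
  param([Parameter(Mandatory = $true)][string]$Path)

  Import-Csv -Path $Path -UseCulture | ForEach-Object {
    [PSCustomObject]@{
      VMName        = $_.VMName
      HDLabel       = $_.HDLabel
      HDMode        = $_.HDMode
      LunDeviceName = $_.LunDeviceName
      HDController  = [int]$_.HDController
      HDUnit        = [int]$_.HDUnit
      HDSize        = [long]$_.HDSize
    }
  }
}
```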

Removing the RDM

For this step I use a filter called Remove-HD. This makes it easy to combine it in a pipeline with the Get-HardDisk cmdlet.

I could have used PowerCLI’s Remove-HardDisk, but this cmdlet leaves the mapping files on the datastore, where they keep consuming disk space for nothing.

The filter has two steps: the first removes the RDM from the guest’s configuration (ReconfigVM_Task), and the second removes the mapping file(s) from the datastore (DeleteDatastoreFile_Task).
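Both the filter and New-RawHardDisk poll a vSphere task the same way: they refresh Info.State until the task is no longer queued or running. Below is a minimal sketch of that loop as a reusable helper, with a short sleep between polls and an error check. The name Wait-ViewTask and the polling interval are assumptions. The function expects a task view object such as the one returned by `Get-View $taskMoRef`.

```powershell
# Hypothetical helper: wait for a vSphere task view object (Get-View $taskMoRef)
# to leave the "queued"/"running" states, then return its refreshed Info.
function Wait-ViewTask {
  param(
    [Parameter(Mandatory = $true)]$Task,
    [int]$PollMilliseconds = 500
  )

  while ("running", "queued" -contains $Task.Info.State) {
    Start-Sleep -Milliseconds $PollMilliseconds
    $Task.UpdateViewData("Info.State")
  }
  if ($Task.Info.State -eq "error") {
    # Only fetch the error details when the task actually failed
    $Task.UpdateViewData("Info.Error")
    throw "Task failed: $($Task.Info.Error.LocalizedMessage)"
  }
  $Task.UpdateViewData("Info.Result")
  $Task.Info
}
```

With such a helper, each `Get-View $taskMoRef` plus `while` loop in the script could become `Wait-ViewTask -Task (Get-View $taskMoRef)`.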

Connecting the RDM

This function (New-RawHardDisk) already appeared in the PowerCLI Community thread Adding an existing hard disk. I only added some logic to support both physical and virtual mode RDMs.
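The report stores the vSphere compatibility mode (physicalMode or virtualMode). PowerCLI’s Get-HardDisk and New-RawHardDisk use the names RawPhysical and RawVirtual instead. Be careful when deriving one from the other with something like `.TrimEnd("Mode")`: String.TrimEnd treats "Mode" as a set of characters, not as a suffix, so it only works because "physical" and "virtual" happen to end in an "l". Below is a more explicit sketch. The function name is hypothetical.

```powershell
# Hypothetical helper: map the vSphere compatibility mode stored in the report
# to the PowerCLI disk type name (switch comparisons are case-insensitive)
function ConvertTo-RdmDiskType {
  param([Parameter(Mandatory = $true)][string]$CompatibilityMode)

  switch ($CompatibilityMode) {
    'physicalMode' { 'RawPhysical' }
    'virtualMode'  { 'RawVirtual' }
    default        { throw "Not an RDM compatibility mode: $CompatibilityMode" }
  }
}
```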

Main function

This is the driving part of the script. It controls the logic and the order in which the function and the filter are called.

The script

```powershell
# Destination ESX server to which the VMs with RDMs will be vMotioned
$tgtESX = "My-target-ESX-host"

# Attach an RDM disk to a VM on a specified controller and unit number
function New-RawHardDisk{
  param($vm, $DeviceName, $DiskType, $controller, $unitnumber, $capacity)

  $vmMo = $vm | Get-View
  $spec = New-Object VMware.Vim.VirtualMachineConfigSpec
  $chg = New-Object VMware.Vim.VirtualDeviceConfigSpec
  $chg.operation = "add"
  $chg.fileoperation = "create"
  $dev = New-Object VMware.Vim.VirtualDisk
  $dev.CapacityInKB = $capacity
  $dev.Key = -100
  $back = New-Object VMware.Vim.VirtualDiskRawDiskMappingVer1BackingInfo
  $back.deviceName = $DeviceName
  if($DiskType -eq "RawPhysical"){
    $back.compatibilityMode = "physicalMode"
  }
  else{
    $back.compatibilityMode = "virtualMode"
  }
  $back.FileName = ""
  $dev.Backing = $back
  $dev.ControllerKey = $controller
  $dev.UnitNumber = $unitnumber
  $chg.device = $dev
  $spec.deviceChange += $chg

  # Reconfigure the VM and wait for the task to finish
  $taskMoRef = $vmMo.ReconfigVM_Task($spec)
  $task = Get-View $taskMoRef
  while("running","queued" -contains $task.Info.State){
    Start-Sleep -Milliseconds 500
    $task.UpdateViewData("Info.State")
  }
  $task.UpdateViewData("Info.Result")
}

# Remove an RDM disk from a VM and optionally delete the mapping file(s)
filter Remove-HD {
  param($Delete)

  $HDname = $_.Name
  $vm = Get-View -Id $_.ParentId
  # Get the device object that corresponds with the hard disk
  $dev = $vm.Config.Hardware.Device | Where-Object {$_.DeviceInfo.Label -eq $HDname}
  if(-not $dev){ return }

  $spec = New-Object VMware.Vim.VirtualMachineConfigSpec
  $spec.deviceChange = @()
  $spec.deviceChange += New-Object VMware.Vim.VirtualDeviceConfigSpec
  $spec.deviceChange[0].device = New-Object VMware.Vim.VirtualDevice
  $spec.deviceChange[0].device.key = $dev.Key
  $spec.deviceChange[0].device.unitnumber = $dev.UnitNumber
  $spec.deviceChange[0].operation = "remove"

  # Remove the RDM from the VM's configuration and wait for the task to finish
  $taskMoRef = $vm.ReconfigVM_Task($spec)
  $task = Get-View $taskMoRef
  while("running","queued" -contains $task.Info.State){
    Start-Sleep -Milliseconds 500
    $task.UpdateViewData("Info.State")
  }
  $task.UpdateViewData("Info.Result")

  # Remove the mapping file(s) from the datastore
  if ($Delete){
    $fileMgr = Get-View (Get-View ServiceInstance).Content.fileManager
    $datacenter = (Get-View (Get-VM $vm.Name | Get-Datacenter).ID).get_MoRef()
    # $vm still holds the file layout from before the reconfiguration
    foreach($disk in $vm.LayoutEx.Disk){
      if($disk.Key -eq $dev.Key){
        foreach($chain in $disk.Chain){
          foreach($fileKey in $chain.FileKey){
            $name = ($vm.LayoutEx.File | Where-Object {$_.Key -eq $fileKey}).Name
            $taskMoRef = $fileMgr.DeleteDatastoreFile_Task($name, $datacenter)
            $task = Get-View $taskMoRef
            while("running","queued" -contains $task.Info.State){
              Start-Sleep -Milliseconds 500
              $task.UpdateViewData("Info.State")
            }
            $task.UpdateViewData("Info.Result")
          }
        }
        break
      }
    }
  }
}

# Main
$report = @()
# Report on all the VMs that have an RDM
$vms = Get-View -ViewType VirtualMachine
foreach($vm in $vms){
  foreach($dev in $vm.Config.Hardware.Device){
    if(($dev.GetType()).Name -eq "VirtualDisk"){
      if(($dev.Backing.CompatibilityMode -eq "physicalMode") -or ($dev.Backing.CompatibilityMode -eq "virtualMode")){
        $esx = Get-View $vm.Runtime.Host
        $lun = $esx.Config.StorageDevice.ScsiLun | Where-Object {$_.Uuid -eq $dev.Backing.LunUuid}
        $report += New-Object PSObject -Property @{
          VMName = $vm.Name
          VMHost = $esx.Name
          HDLabel = $dev.DeviceInfo.Label
          HDDeviceName = $dev.Backing.DeviceName
          HDFileName = $dev.Backing.FileName
          HDMode = $dev.Backing.CompatibilityMode
          HDSize = $dev.CapacityInKB
          LunDisplayName = $lun.DisplayName
          HDCtrlType = ($vm.Config.Hardware.Device | Where-Object {$_.Key -eq $dev.ControllerKey}).GetType().Name
          HDController = $dev.ControllerKey
          HDUnit = $dev.UnitNumber
          HDDiskMode = $dev.Backing.DiskMode
          LunCanonical = $lun.CanonicalName
          LunDeviceName = $lun.DeviceName
        }
      }
    }
  }
}
# Dump the RDM information to a CSV file
$report | Export-Csv "C:\VM-with-RDM.csv" -NoTypeInformation -UseCulture

# One group per VM, since a VM can have more than one RDM
$report | Group-Object -Property VMName | ForEach-Object {
  $group = $_
  $vm = Get-VM -Name $group.Name

  # Stop the VM; Shutdown-VMGuest returns immediately, so wait for the power-off
  Write-Host "Stop VM" $group.Name
  $guest = $vm | Get-VMGuest
  if($guest.State -eq "Running"){
    $guest | Shutdown-VMGuest -Confirm:$false | Out-Null
    while((Get-VM -Name $group.Name).PowerState -ne "PoweredOff"){
      Start-Sleep -Seconds 5
    }
  }

  # Remove the RDM disk(s) listed in the report for this VM
  $group.Group | ForEach-Object {
    $rdm = $_
    Write-Host "`tremove HD" $rdm.HDLabel
    Get-HardDisk -VM $vm | Where-Object {$_.Name -eq $rdm.HDLabel} | Remove-HD -Delete:$true
  }

  # vMotion the VM
  Write-Host "Moving VM" $group.Name
  $newvm = $vm | Move-VM -Destination (Get-VMHost -Name $tgtESX)

  # Attach the RDM disk(s) back, on the same controller and unit number
  $group.Group | ForEach-Object {
    Write-Host "`tAdd HD" $_.HDLabel
    New-RawHardDisk -vm $newvm -DiskType ("Raw" + ($_.HDMode -replace 'Mode$', '')) -DeviceName $_.LunDeviceName -controller $_.HDController -unitnumber $_.HDUnit -capacity $_.HDSize
  }

  # Power on the VM
  Write-Host "Start VM" $group.Name
  $newvm | Start-VM -Confirm:$false
}
```

Annotation

Line 2: destination ESX server

Lines 18-23: support for physical and virtual mode RDMs

Line 124: dump the RDM info to a CSV file

Lines 127-161: this is the part where the RDM disk(s) are removed, the VM is vMotioned and the RDM disk(s) are re-attached.

Line 127: because a guest can have more than one RDM, I use the Group-Object cmdlet
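The following small, self-contained sketch uses made-up report rows (the VM names and disk labels are sample data only) to show what Group-Object hands to the ForEach-Object (%) block. Each group’s Name is the VM name, and its Group property holds that VM’s RDM rows in their original order. Each VM is therefore stopped, moved and restarted only once.

```powershell
# Made-up report rows in the shape produced by the script (sample data only)
$report = @(
  [PSCustomObject]@{ VMName = 'VM1'; HDLabel = 'Hard disk 2'; HDController = 1000; HDUnit = 1 }
  [PSCustomObject]@{ VMName = 'VM2'; HDLabel = 'Hard disk 2'; HDController = 1000; HDUnit = 1 }
  [PSCustomObject]@{ VMName = 'VM1'; HDLabel = 'Hard disk 3'; HDController = 1001; HDUnit = 0 }
)

# One group per VM: Name holds the VM name, Group holds that VM's RDM rows
$plan = $report | Group-Object -Property VMName | ForEach-Object {
  $group = $_
  [PSCustomObject]@{
    VMName = $group.Name
    Disks  = ($group.Group | ForEach-Object {
      '{0} (controller {1}, unit {2})' -f $_.HDLabel, $_.HDController, $_.HDUnit
    }) -join '; '
  }
}
$plan
```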

Robdogaz

Great script, it works for what I need. One question though: it is removing my NIC. Can you think of anything that would cause that?

I have 21 physical RDMs; it removes them and re-adds them, but it also removes the NIC (“Flexible”).

LucD

@Robdogaz, I can’t immediately see a reason why the script would remove the NIC from the VM.

One situation where I know this can happen is when there aren’t any free ports on the switch on the destination ESXi host.

It seems that vMotion doesn’t check if there are free ports on the switch on the destination.

But that shouldn’t remove the NIC; the NIC will still be there, but it will be disconnected.

Is that the case with your VMs? Are the NICs disconnected?
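To check this, a quick sketch that lists the network adapters of a migrated VM and shows whether they are connected. It assumes a connected PowerCLI session. The function name is hypothetical.

```powershell
# Hypothetical helper: show the network adapters of a VM and their connection
# state (requires a connected PowerCLI session)
function Get-NicConnectionState {
  param([Parameter(Mandatory = $true)][string]$VMName)

  Get-VM -Name $VMName | Get-NetworkAdapter |
    Select-Object Parent, Name, Type, NetworkName,
      @{N = 'Connected'; E = {$_.ConnectionState.Connected}},
      @{N = 'StartConnected'; E = {$_.ConnectionState.StartConnected}}
}
```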

Scott

I am having an issue with this script and I am wondering if you could help. I am trying to remove a physical RDM and remap it as a virtual RDM, but I am having trouble remapping. In the VI console it states “Incompatible device backing specified for device ‘0’”. Any ideas what might be going wrong? The script works if I remove and remap a physical RDM as a test. Thanks for any pointers.

LucD

Hi Scott, these functions are quite old and the PowerCLI build at that time couldn’t handle RDMs correctly.

In the current build you should be able to do the same thing with the Remove-Harddisk and the New-Harddisk cmdlets.
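With a more recent PowerCLI build, the remove-and-remap steps could look roughly like the sketch below. It assumes a connected session, a powered-off VM, and that the new RDM should go on the same SCSI controller. The function name and parameters are hypothetical. `Remove-HardDisk -DeletePermanently` also deletes the mapping file, which addresses the leftover-file issue mentioned in the article.

```powershell
# Hypothetical sketch: re-create an RDM in virtual compatibility mode with the
# PowerCLI cmdlets (requires a connected PowerCLI session and a powered-off VM)
function Convert-RdmToVirtual {
  param(
    [Parameter(Mandatory = $true)][string]$VMName,
    [Parameter(Mandatory = $true)][string]$HardDiskName
  )

  $vm = Get-VM -Name $VMName
  $hd = Get-HardDisk -VM $vm -Name $HardDiskName
  # Keep what is needed to re-attach the same LUN on the same controller
  $deviceName = $hd.DeviceName
  $controller = Get-ScsiController -HardDisk $hd

  Remove-HardDisk -HardDisk $hd -DeletePermanently -Confirm:$false
  New-HardDisk -VM $vm -DiskType RawVirtual -DeviceName $deviceName -Controller $controller
}
```

New-HardDisk picks the unit number on that controller itself, so it may be worth checking the result when the guest depends on a fixed disk order.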

Josh Feierman

Thank you, thank you, thank you for this script. I recently had to move a half dozen VMs with over three dozen RDMs in total. With a little bit of tweaking it saved me many hours of mindless GUI clicking, not to mention a very sizable risk of fat-fingering something. Nice work!

Michael

Hello Luc,

Thank you for the great script. I’m trying to use it in two steps (two separate scripts) for our SRDF failover. The first step (one script) creates the RDM report; the second step imports the CSV report and uses that data to attach the RDMs. The disk order is important, as we have VMs with many RDMs.

The first part works great (it exports the report and removes the RDM and backing file), but I’m having difficulty importing the CSV and connecting the RDM back to its VM.

It goes through importing the CSV and I can see the variables populate with the data, but at the end I get errors about null arguments and methods.

Can you help please?

LucD

@Michael, can you send me the scripts you are using, so I can have a look.

Send it to lucd (at) lucd (dot) info

Gert Van Gorp

Hi Luc,

Hope you had a nice trip back from SF.

As I told you in SF, I am writing a PS script with a GUI to move/clone VMs with RDMs connected. Thanks to your code I have come a long way.

The only thing I want to do with the above script is to clone VM-A (with VMDK & RDM) to another location. But when I clone, the RDM is removed and the clone to VM-B is done, but the script does not wait until the end of the clone operation to re-add the RDM to VM-A. Is there a way to have the New-VM cmdlet wait until the clone is done before moving on with the next step in the script?

LucD

Thanks Gert, I did.

I’m afraid you have to look for another way to wait till the new VM-B is ready.

The New-VM cmdlet creates the new guest, creates the sysprep input files, copies the machine over and powers on VM-B. At that point the task is finished for New-VM.

In the meantime, the sysprep process is running on VM-B, which requires at least one reboot of VM-B.

If you can find a way that VM-B informs your script that the sysprep process is finished, you have a solution.

In a similar situation, I created a token file on a share at the end of the sysprep process, and let my script run in a loop until the token file was present. But this requires network connectivity and membership.
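The token-file approach can be sketched as a polling loop with a timeout. The function name Wait-TokenFile, the timeout and the polling interval are assumptions. The token file itself would have to be created by a command that runs at the end of the sysprep process in VM-B.

```powershell
# Hypothetical helper: wait until a token file appears (for example on a share
# that VM-B writes to at the end of sysprep), or give up after a timeout.
# Returns $true when the file was found, $false on timeout.
function Wait-TokenFile {
  param(
    [Parameter(Mandatory = $true)][string]$Path,
    [int]$TimeoutSeconds = 3600,
    [int]$PollSeconds = 30
  )

  $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
  while (-not (Test-Path -LiteralPath $Path)) {
    if ((Get-Date) -ge $deadline) {
      return $false
    }
    Start-Sleep -Seconds $PollSeconds
  }
  return $true
}
```

For example, `if (Wait-TokenFile -Path '\\fileserver\share\VM-B.done') { ... }`, where the UNC path is only a placeholder.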

Like I said at the beginning, this is not a New-VM problem but a sysprep problem: how do you find out, externally, that the sysprep process is finished?

Sorry I don’t have a clear solution for this.

John House

updated my email address.

John House

Hi Luc, thanks for doing that; it does make it a lot clearer, although I still can’t seem to hack it for what I need.

Essentially, we are cloning VMs using the storage array, and I’ve “written” (pinched) scripts to do that for normal VMs (without RDMs), but we have some clusters that use RDMs and they do not clone well. So I need to 1) remove the RDMs from the cloned VMs (because these are invalid), and 2) re-add the cloned RDM LUNs (which have different LUN IDs from the originals).

Long shot, but do you know of any existing scripts (I initially thought I could use this one) to which I can pass some parameters (“VM Name”, “SCSI Controller ID”, “LUN ID”, etc.) and that will add the RDM in physical compatibility mode to the VM?

Thanks again, you’re a king in the community!

LucD

John, there are no stupid questions, just stupid answers 😉

The script runs against all the VMs on a specific ESX (which you specify in line 2).

I have added a few comment lines to the script and indented the lines where appropriate.

Let me know if that helps.

Luc.

Amit

Hi Luc,

Do you have a script for VM-level migration?

We have to migrate a lot of VMs with physical RDMs from one data center to another data center with new compute and storage (HDS), and I want to be able to run the script per VM rather than per ESX host.

thanks,

Amit

John House

Hi, stupid question, but how do you actually run this? I can’t see anywhere where the VM is specified. Pasting the above code into PS doesn’t do anything.

Thanks

justasimpledude

So much typing!!

Why not copy and paste your script 😉 It must have taken ages to type. Instead, why not do the following … much less effort!

* stop the guest

* write down the Physical LUN ID

* remove the RDM disk(s)

* vMotion the guest

* add the RDM disk(s) to the guest based on the Physical LUN ID

* start the guest

LucD

Thanks for the tip.

While I agree this would surely be faster than me typing the script, the script has its advantages when you have to migrate substantial numbers of guests with RDMs.

And you avoid making “human errors” 😉

Automation proves its worth when the task becomes repetitive and when you have to tackle bigger numbers.

shatztal

Come on man, couldn’t you have posted this 3 weeks ago?

I wanted to write a script, but because I didn’t have the time, it did not happen.

Nice one.

BTW: the problem also happens if the LUN ID is the same on both hosts.

LucD

Sorry that my timing was not optimal 😉

Thanks for the info on identical LUN IDs, I didn’t know that one.

nate

Even if the LUN ID is the same, under certain circumstances the error can still pop up: https://www.techopsguys.com/2009/08/18/its-not-a-bug-its-a-feature/

It worked fine in ESX 3.0, 3.02 and 3.5 (when the LUN ID is the same). I call it a bug, they call it a feature.

LucD

Thanks for the feedback.

At least you can now automate the tedious process of re-mapping all the RDM volumes 😉